Purdue 2.0: Exploring a New Model for IT/OT Management

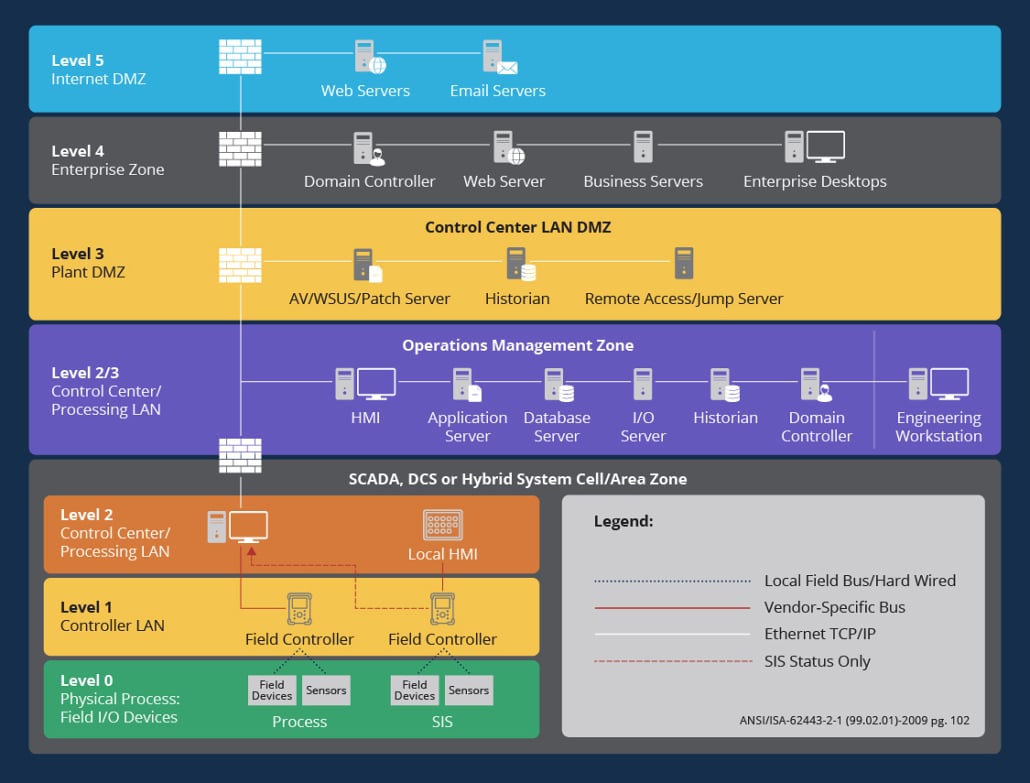

Developed in 1992 by Theodore J. Williams and the Purdue University Consortium, the Purdue diagram — itself a part of the Purdue Enterprise Reference Architecture (PERA) — was one of the first models used to map data flows in computer-integrated manufacturing (CIM).

By defining six layers that contain both information technology (IT) and operational technology (OT), along with a demilitarized zone (DMZ) separating them, the Purdue diagram made it easier for companies to understand the relationship between IT and OT and establish effective access controls to limit total risk.

As OT systems have evolved to include network-enabled functions and outward-facing connections, however, it’s time for companies to prioritize a Purdue update that puts security front and center.

The Problem with Purdue 1.0

A recent Forbes piece put it simply: “The Purdue model is dead. Long live, Purdue.”

This paradox is plausible, thanks to the ongoing applicability of Purdue models. Even if they don’t quite match the reality of IT and OT deployments, they provide a reliable point of reference for both IT and OT teams.

The problem with Purdue 1.0 stems from its approach to OT as devices that have MAC addresses but no IP addresses. Consider programmable logic controllers (PLCs). These PLCs are typically identified by MAC address in Layer 2 of a Purdue diagram. The need for comprehensive visibility across OT and IT networks, however, has led to increased IP address assignment across PLCs, in turn making them network endpoints rather than discrete devices.

There’s also an ongoing disconnect between IT and OT approaches. Where IT teams have spent years looking for ways to bolster both internal and external network security, traditional OT engineers often see security as an IT-only problem. The result is IP address assignment to devices but no follow-up on who can access the devices and for what purpose. In practice, this limits OT infrastructure visibility while creating increased risk and security concerns, especially as companies are transitioning more OT management and monitoring to the cloud.

Adopting a New Approach to Purdue

As noted above, the Purdue diagram isn’t dead, but it does need an update. Standards such as ISA/IEC 62443 offer a solid starting point for computer-integrated manufacturing frameworks, with a risk-based approach that assumes any device can pose a critical security risk and that all classes of devices across all levels must be both monitored and protected. These standards also take the position that communication between devices and across layers is necessary for companies to ensure CIM performance.

This requires a new approach to the Purdue model that removes the distinction between IT and OT devices. Instead of viewing these devices as separate entities on a larger network, companies need to recognize that the addition of IP addresses in Layer 2 and even Layer 1 devices creates a situation where all devices are equally capable of creating network compromise or operational disruption.

In practice, the first step of Purdue 2.0 is complete network mapping and inventory. This means discovering all devices across all layers, whether they have a MAC address, IP address, or both. This is especially critical for OT devices because, unlike their IT counterparts, they rarely change. In some companies, ICS and SCADA systems have been in place for 5, 10, even 20 years or more, while IT devices are regularly replaced. As a result, once OT inventory is completed, minimal change is necessary. Without this inventory, however, businesses are flying blind.
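As a rough illustration of what such an inventory might look like, the following Python sketch merges discovery records from two hypothetical sources (a switch MAC table and an IP scan) into one entry per device. The record fields, device names, addresses, and the `merge_inventory` helper are all assumptions made for this example and do not reflect any particular product's data model.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Device:
    name: str
    level: int                      # Purdue level, 0-5
    mac: Optional[str] = None
    ip: Optional[str] = None
    sources: set = field(default_factory=set)


def merge_inventory(records):
    """Merge discovery records that share a MAC or IP address into one Device each."""
    devices = []
    by_mac, by_ip = {}, {}
    for rec in records:
        mac = rec.get("mac")
        mac = mac.lower() if mac else None   # normalize case so MACs compare equal
        ip = rec.get("ip")
        # Match on MAC first, then fall back to IP.
        device = by_mac.get(mac) if mac else None
        if device is None and ip:
            device = by_ip.get(ip)
        if device is None:
            device = Device(name=rec["name"], level=rec["level"])
            devices.append(device)
        # Fill in whichever address this record contributes.
        device.mac = device.mac or mac
        device.ip = device.ip or ip
        device.sources.add(rec["source"])
        if device.mac:
            by_mac[device.mac] = device
        if device.ip:
            by_ip[device.ip] = device
    return devices


# Hypothetical discovery data, invented for illustration only.
records = [
    {"source": "switch-mac-table", "name": "plc-01", "level": 1, "mac": "00:1D:9C:AA:BB:01"},
    {"source": "ip-scan", "name": "plc-01", "level": 1, "mac": "00:1d:9c:aa:bb:01", "ip": "10.10.1.21"},
    {"source": "switch-mac-table", "name": "valve-ctrl-07", "level": 1, "mac": "00:1d:9c:aa:bb:07"},
    {"source": "ip-scan", "name": "historian", "level": 3, "ip": "10.10.3.5"},
]

inventory = merge_inventory(records)
# Devices visible only by MAC address would be missed by an IP-only scan.
mac_only = [d.name for d in inventory if d.mac and not d.ip]
```

Here `mac_only` lists the devices that an IP-only scan would never see, which is the gap the inventory step is meant to close. A real deployment would also need to reconcile conflicting records, which this sketch does not attempt.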

Inventory assessment also offers the benefit of in-depth metric monitoring and management. By understanding how OT devices are performing and how this integrates into IT efforts, companies can streamline current processes to improve overall efficiency.

Controlling for Potential Compromise

The core concept of evolving IT/OT systems is interconnectivity. Gone are the days of Level 1 and 2 devices capable only of internal interactions, while those on Levels 3, 4, and 5 connect with networks at large. Bolstered by the adoption of the Industrial Internet of Things (IIoT), continuous connectivity is par for the course.

The challenge? More devices create an expanding attack surface. If attackers can compromise databases or applications, they may be able to move vertically down network levels to attack connected OT devices. Even more worrisome is the fact that since these OT devices have historically been one step removed from internet-facing networks, businesses may not have the tools, technology, or manpower necessary to detect potential vulnerabilities that could pave the way for attacks.
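One way to reason about this kind of vertical movement is to treat permitted connections as a directed graph and ask which OT assets are reachable from a compromised IT asset. The sketch below does this with a breadth-first search. The asset names, Purdue level assignments, and allowed-connection map are hypothetical values chosen for illustration, not output from a real network model.

```python
from collections import deque

# Hypothetical assets mapped to Purdue levels.
LEVELS = {
    "erp-db": 4,
    "historian": 3,
    "hmi-01": 2,
    "plc-01": 1,
    "plc-02": 1,
}

# Hypothetical allowed connections: each source maps to the
# destinations it is permitted to initiate traffic to.
ALLOWED = {
    "erp-db": ["historian"],
    "historian": ["hmi-01"],
    "hmi-01": ["plc-01"],
    "plc-01": [],
    "plc-02": [],
}


def reachable_from(start, allowed):
    """Return every asset reachable from `start` by following allowed connections."""
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for nxt in allowed.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    seen.discard(start)  # the starting asset is not counted as "reached"
    return seen


# OT assets (Level 2 and below) exposed if the Level 4 database is compromised.
exposed_ot = sorted(a for a in reachable_from("erp-db", ALLOWED) if LEVELS[a] <= 2)
```

In this toy network, `exposed_ot` contains `hmi-01` and `plc-01` but not `plc-02`, because no chain of allowed connections leads to it. No single rule connects the database to a PLC directly, yet the chain of individually reasonable rules does.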

It’s worth noting that these OT vulnerabilities aren’t new — they’ve always existed but were often ignored under the pretense of isolation. Given the lack of outside-facing network access, they often posed minimal risk, but as IIoT becomes standard practice, these vulnerabilities pose very real threats.

And these threats can have far-reaching consequences. Consider two cases: one IT attack and one OT compromise. If IT systems are down, staff can be sent home or assigned other tasks while problems are identified and issues are remediated, but production remains on pace. If OT systems fail, meanwhile, manufacturing operations come to a standstill. Lacking visibility into OT inventories makes it more difficult for teams to both discover where compromise occurred and determine the best way to remediate the issue.

As a result, controlling for compromise is the second step of Purdue 2.0. RedSeal makes it possible to see what you’re missing. By pulling in data from hundreds of connected tools and sensors and then importing this data into scan engines — such as Tenable — RedSeal can both identify vulnerabilities and provide context for these weak points. Equipped with data about devices themselves, including manufacturing and vendor information, along with metrics that reflect current performance and behavior, companies are better able to discover vulnerabilities and close critical gaps before attackers can exploit OT operations.
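To make the idea of adding context to vulnerabilities concrete, here is a generic Python sketch that ranks scanner findings using device context. It is not RedSeal's or Tenable's actual logic or API. The finding identifiers, severity scores, context fields, and the ordering rule are all assumptions chosen for illustration.

```python
# Hypothetical scanner findings; IDs and severity scores are invented.
findings = [
    {"device": "plc-01", "id": "VULN-A", "severity": 7.5},
    {"device": "plc-02", "id": "VULN-B", "severity": 9.8},
    {"device": "historian", "id": "VULN-C", "severity": 6.1},
]

# Hypothetical device context drawn from an inventory and a reachability analysis.
context = {
    "plc-01": {"level": 1, "reachable_from_it": True},
    "plc-02": {"level": 1, "reachable_from_it": False},
    "historian": {"level": 3, "reachable_from_it": True},
}


def prioritize(findings, context):
    """Order findings: reachable devices first, then lower Purdue level, then higher severity."""
    def sort_key(finding):
        ctx = context[finding["device"]]
        return (not ctx["reachable_from_it"], ctx["level"], -finding["severity"])
    return sorted(findings, key=sort_key)


ranked_ids = [f["id"] for f in prioritize(findings, context)]
```

With this assumed ordering, the highest-severity finding (`VULN-B`) ranks last because its device is not reachable from the IT network, while a lower-severity finding on a reachable PLC ranks first. The weighting is a judgment call; the point is only that severity alone does not determine priority once context is available.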

Put simply? Companies can’t defend what they can’t see. This means that while the Purdue diagram remains a critical component of CIM success, after 30 years in business, it needs an update. RedSeal can help companies bring OT functions in line with IT frameworks by discovering all devices on the network, pinpointing potential vulnerabilities, and identifying ways to improve OT security.