Expert Insights: Building a World-Class OT Cybersecurity Program

In an age where manufacturing companies are increasingly reliant on digital technologies and interconnected systems, the importance of robust cybersecurity programs cannot be overstated. While attending Manusec in Chicago this week, RedSeal participated on a panel of cybersecurity experts to discuss the key features, measurement of success, and proactive steps that can lead to a more mature OT (Operational Technology) cybersecurity posture for manufacturing companies. This blog provides insights and recommendations from CISOs and practitioners from Revlon, AdvanSix, Primient, Fortinet, and our own Sean Finn, Senior Global Solution Architect for RedSeal.

Key features of a world-class OT cybersecurity program

The panelists brought decades of experience across manufacturing and related vendor roles. The discussion centered on three main themes, complemented by a set of organizational considerations:

- Visibility

- Automation

- Metrics

Visibility

The importance of having an accurate understanding of the current network environment.

The panel unanimously agreed: visibility, visibility, visibility is the most critical first step to securing the network. The quality of an organization’s “situational awareness” is critical both to maximizing the availability of OT systems and to minimizing the operational friction of incident response and change management.

Legacy element management systems may not be designed to provide visibility into everything that is on the network. The panel identified the importance of a holistic view of the extended OT environment in both proactive and reactive contexts.

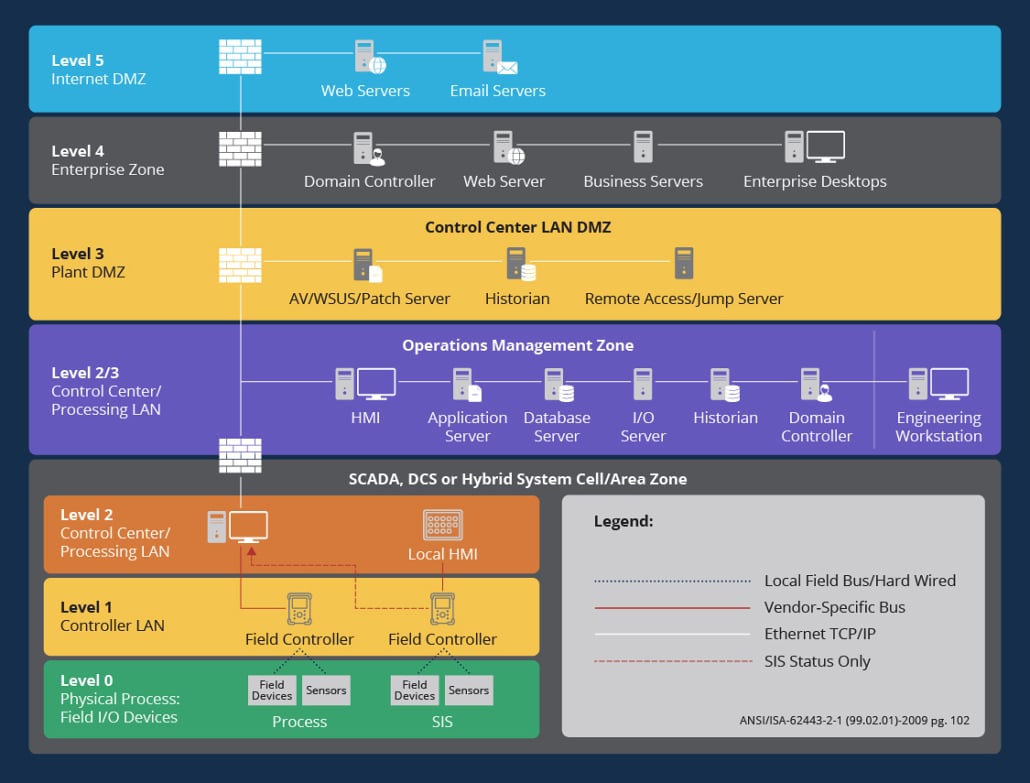

The increasingly common direct connectivity between Information Technology (IT) and Operational Technology (OT) environments increases the importance of understanding the full scope of available access – both inbound and outbound.
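As a rough illustration of what "the full scope of available access" can mean in practice, the sketch below walks a map of permitted connections to find everything that can reach an asset (inbound) and everything the asset can reach (outbound), including indirect paths. The device names and the `ALLOWED_HOPS` map are hypothetical and are not drawn from the panel discussion or from any product.

```python
from collections import deque

# Hypothetical map of permitted connections: each key may open
# connections to the devices in its list. Names are illustrative only.
ALLOWED_HOPS = {
    "corp-laptop": ["jump-host"],
    "jump-host": ["historian"],
    "historian": ["plc-1", "vendor-cloud"],
    "plc-1": [],
    "vendor-cloud": [],
}


def reachable_from(start, hops):
    """Return every device reachable from `start`, directly or indirectly."""
    seen = set()
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for nxt in hops.get(node, []):
            if nxt != start and nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen


def reverse(hops):
    """Flip every edge so that a forward search yields inbound access."""
    rev = {node: [] for node in hops}
    for src, targets in hops.items():
        for dst in targets:
            rev.setdefault(dst, []).append(src)
    return rev


# Outbound: what the historian can reach.
outbound = reachable_from("historian", ALLOWED_HOPS)
# Inbound: what can reach the historian, including multi-hop paths.
inbound = reachable_from("historian", reverse(ALLOWED_HOPS))
```

In this toy map the corporate laptop never connects to the PLC directly, yet `reachable_from("corp-laptop", ALLOWED_HOPS)` still includes `plc-1` because of the chain through the jump host and historian. Indirect paths like this are the kind of access that a device-by-device view tends to miss.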

Automation

Automation and integrations are key components for improving both visibility and operational efficiency.

- Proactive assessment and automated detection: Implement proactive assessment measures to detect and prevent segmentation violations, enhancing the overall security posture.

- Automated validation: Protecting legacy technologies and ensuring control over IT-OT access portals are essential. Automated validation of security segmentation helps in protecting critical systems and data.

- Leveraging system integration and automation: Continue to invest in system integration and automation to streamline security processes and responses.
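A minimal sketch of what automated segmentation validation might look like: observed flows are mapped to zones and compared against an allow-list, and anything that crosses a zone boundary without a matching rule is flagged. The zone names, CIDR ranges, ports, and the `Flow` record are assumptions made for illustration; a real program would pull policy and traffic data from its own tooling.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network

# Hypothetical zones; the CIDR ranges are illustrative only.
ZONES = {
    "it": ip_network("10.10.0.0/16"),
    "dmz": ip_network("10.20.0.0/24"),
    "ot": ip_network("10.30.0.0/16"),
}

# Hypothetical allow-list of (source zone, destination zone, destination port).
ALLOWED = {
    ("it", "dmz", 443),
    ("dmz", "ot", 44818),
}


@dataclass(frozen=True)
class Flow:
    src: str
    dst: str
    port: int


def zone_of(addr):
    """Map an IP address to its zone name, or "unknown" if none matches."""
    ip = ip_address(addr)
    for name, net in ZONES.items():
        if ip in net:
            return name
    return "unknown"


def find_violations(flows):
    """Return (flow, src_zone, dst_zone) for each flow not covered by ALLOWED."""
    violations = []
    for flow in flows:
        src_zone, dst_zone = zone_of(flow.src), zone_of(flow.dst)
        # Traffic inside a single known zone is out of scope for this sketch.
        if src_zone == dst_zone and src_zone != "unknown":
            continue
        if (src_zone, dst_zone, flow.port) not in ALLOWED:
            violations.append((flow, src_zone, dst_zone))
    return violations


observed = [
    Flow("10.10.1.5", "10.20.0.8", 443),   # IT to DMZ on an allowed port
    Flow("10.10.1.5", "10.30.2.9", 502),   # IT straight into OT
    Flow("10.30.2.9", "8.8.8.8", 53),      # OT out to an unknown network
]
violations = find_violations(observed)
```

Here the first flow matches the allow-list, while the direct IT-to-OT connection and the outbound OT connection are both reported. Running a check like this on a schedule is one way to move from periodic manual review toward the proactive detection the panel described.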

Metrics

Measuring and monitoring OT success and the importance of a cybersecurity framework for context.

One result of the ongoing advancement of technology is that almost anything within an OT environment can be measured.

While there are multiple “cybersecurity frameworks,” the panel was in strong agreement that it is important to leverage a cybersecurity framework to ensure that you have a cohesive view of your environment. By doing so, organizations will be better-informed regarding cybersecurity investments and resource allocation.

It also helps organizations prioritize and focus on the most critical cybersecurity threats and vulnerabilities.

The National Institute of Standards and Technology (NIST) Cybersecurity Framework was the one most commonly identified by practitioners on the panel.

Cybersecurity metric audiences and modes

Metrics differ by audience and role. Some are valuable for internal awareness and operational considerations; these are separate from the metrics and “KPIs” that are consumed externally as part of “evidencing effectiveness northbound.”

There are also different contexts for measurements and monitoring:

- Proactive metrics/monitoring: This includes maintaining operational hygiene and continuously assessing the state of proactive analytics systems. Why would a hacker want to get in? What is at risk and why does it matter to the organization?

- Reactive metrics/monitoring: Incident detection, response, and resolution times are crucial reactive metrics. Organizations should also regularly assess the state of reactive analytics systems.

- Reflective analysis: After incidents occur, conducting incident post-mortems, including low-priority incidents, can help identify systemic gaps and process optimization opportunities. This reflective analysis is crucial for learning from past mistakes and improving security.
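The reactive metrics above can be computed from basic incident records. The sketch below derives mean time to detect and mean time to resolve from three timestamps per incident. The `Incident` fields and the sample timestamps are hypothetical; actual definitions (for example, whether resolution time is measured from detection or from occurrence) vary by organization.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean


@dataclass(frozen=True)
class Incident:
    occurred: datetime   # when the event actually began
    detected: datetime   # when it was first noticed
    resolved: datetime   # when it was closed out


def mean_duration(deltas):
    """Average a list of timedeltas."""
    return timedelta(seconds=mean([d.total_seconds() for d in deltas]))


def reactive_metrics(incidents):
    """Mean time to detect, and mean time from detection to resolution."""
    if not incidents:
        raise ValueError("at least one incident is required")
    return {
        "mean_time_to_detect": mean_duration(
            [i.detected - i.occurred for i in incidents]
        ),
        "mean_time_to_resolve": mean_duration(
            [i.resolved - i.detected for i in incidents]
        ),
    }


# Illustrative sample data only.
incidents = [
    Incident(datetime(2024, 1, 1, 8), datetime(2024, 1, 1, 9), datetime(2024, 1, 1, 13)),
    Incident(datetime(2024, 1, 2, 8), datetime(2024, 1, 2, 11), datetime(2024, 1, 2, 13)),
]
metrics = reactive_metrics(incidents)
```

Including low-priority incidents in the input, as the reflective analysis point suggests, keeps these averages from reflecting only the most visible events.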

Organizational Considerations

- Cybersecurity risk decisions should be owned by the people who are responsible and accountable for cybersecurity.

- Collaboration with IT: OT and IT can no longer operate in isolation. Building a strong working relationship between these two departments is crucial. Cybersecurity decisions should align with broader business goals, and IT and OT teams must collaborate effectively to ensure security.

- Employee training and awareness: Invest in ongoing employee training and awareness programs to ensure that every member of the organization understands their role in maintaining cybersecurity.

Establishing a world-class OT cybersecurity program for manufacturing companies is an evolving process that requires collaboration, automation, proactive measures, and continuous improvement. By focusing on visibility, collaboration, and a commitment to learning from incidents, organizations can build a strong foundation for cybersecurity in an increasingly interconnected world.

Contact RedSeal today to discuss your organizational needs and discover how RedSeal can provide unparalleled visibility into your OT / IT environments.
